Lecture 21 - Codesign Challenge

- Introduction

- Structure of the code

- Code profiling

- Performance evaluation on the back of the envelope

- Floating point hardware

- Gradual improvement saves the day

Introduction

The Codesign Challenge has been posted online. The following provides some background discussion on how to handle the design problem. This assumes, of course, that you have already carefully read the assignment!

The assignment only concerns the inference part of an image classification problem: from images to image labels. The (original reference code)[https://github.com/SamCymbaluk/CNN] of the problem is based on a neural network library in C. The neural network library includes the training part as well as the inference part. We are using a classic four-layer network with 784 nodes in the first layer, 16 in the second, 16 in the third and 10 in the final layer.

Consult the reference program to see how the 60,000 MNIST images are converted to 13,002 neural network weights. The 13,002 weights come from 784.16 + 16.16 + 16.10 edge weights and 16+16+10 biases. All these numbers, and all computations in the reference implementation are performed in single-precision floating point.

Structure of the code

The software of the reference implementation is stored in software/neuralnetwork.

The following is a list of the files and their purpose. The files that

deserve special attention are indicated in bold.

| File | Purpose |

|---|---|

| cnn.h | Top-level include for neural network library |

| mnist_infertest.c | Benchmark program |

| imagecoef.h | Image coefficients for 100 MNIST images |

| trainingcoef.h | Training coefficients for the {784,16,16,10} network |

| neuralnet.{c,h} | Top-level neural network initialization and learning |

| tensor.{c,h} | Tensor functions |

| loss_functions.{c,h} | Loss functions |

| functions.{c,h} | Sigmoid functions |

| optimizer.{c,h} | |

| dataset.{c,h} | Image data structure definition |

The universal data structure in this program is the tensor, an n-dimensional vector.

A single data structure is thus used to hold tensor data regardless of their dimensionality.

For example, a vector of 10 elements has shape {10, 1}, while a 5-by-5 matrix

has shape {5,5}.

struct Tensor {

unsigned int rank; // number of dimensions + 1

unsigned int* shape; // depth of each dimension

size_t size; // total number of elements

float* data; // data as linear array

};

typedef struct Tensor Tensor;

The top-level program loads images as tensors before computing the inference. The images are given as byte-arrays, but are converted to floating point greyscale values between 0 and 1.

// load images

Tensor **imagearray;

unsigned int tensorShapeImage[] = {IMAGE_SIZE, 1};

imagearray = calloc(NUM_IMAGES, sizeof(Tensor*)); // 100 images

for (int img = 0; img < NUM_IMAGES; img++) {

Tensor* imgTensor = newTensor(2, tensorShapeImage);

for (int c = 0; c < IMAGE_SIZE; c++) {

imgTensor->data[c] = (float) ((float) testimage[img * IMAGE_SIZE + c] / 255.0);

}

imagearray[img] = imgTensor;

}

For each image, the top-level program also loads a label, which describes the number represented in this image. The label is loaded into a {10,1} tensor (even though only a single digit is needed to describe the label).

Tensor **labelarray;

unsigned int tensorShapeLabel[] = {10, 1};

labelarray = calloc(NUM_IMAGES, sizeof(Tensor*));

for (int img = 0; img < NUM_IMAGES; img++) {

Tensor* labelTensor = newTensor(2, tensorShapeLabel);

unsigned int index[] = {0, 0};

index[0] = testlabel[img];

*getElement(labelTensor, index) = 1;

labelarray[img] = labelTensor;

}

Each image and label is then combined together into a Datum. A single datum thus consists of two tensors, one holding image data and one holding an image label.

Datum* testData = calloc(NUM_IMAGES, sizeof(Datum));

for (int img = 0; img < NUM_IMAGES; img++) {

testData[img] = (Datum) {

.x = imagearray[img],

.y = labelarray[img]

};

}

free(imagearray);

free(labelarray);

The top-level program then instantiates a neural network consisting of four layers of tensors, then computes the inference on 100 stored images.

// Initialize neural network

unsigned int nnShape[] = {784, 16, 16, 10};

NeuralNet* nn = newNeuralNet(4, nnShape, MeanSquaredError);

loadMemNeuralNet(nn); // this function extracts image data from imagecoef.h

for (unsigned i = 0; i<100; i++) {

Datum datum = testData[i];

copyTensor(datum.x, nn->input);

forwardPass(nn);

printf("Test Number %d: Network prediction: %d Expected: %d Confidence: %f%%\n",

i,

argmax(nn->output),

argmax(datum.y),

100*nn->output->data[argmax(nn->output)]);

}

The bulk of the work in a ‘forward pass’ consists of matrix multiplications. Each tensor is multiplied with a corresponding coefficient vector with training data. At the end of each layer, a bias is added and the output sum is fed into a non-linear sigmoid. Among these operations, the matrix multiplication does the bulk of the work.

void forwardPass(NeuralNet* nn) {

// A_n = sigmoid (W_n * A_(n - 1) + B_n)

for (int n = 1; n < nn->depth; n++) {

// A_n = W_n * A_(N - 1)

matmul(nn->weights[n - 1], nn->layers[n - 1], nn->layers[n]);

// A_n = A_n + B_n

add(nn->layers[n], nn->biases[n - 1], nn->layers[n]);

// Store pre-activation values in z

if (nn->train) copyTensor(nn->layers[n], nn->zs[n]);

// A_n = sigmoid (A_N)

if (n == nn->depth - 1) {

softmax(nn->layers[n]); // Apply softmax instead of sigmoid on final output layer

} else {

sigmoid(nn->layers[n]);

}

}

}

Code profiling

The best starting point for this program is to study how the program

works. You can run gprof on the program. The profile profile.txt

provided in the reference shows to top-level usage of the compute

cycles.

The top-contenders include floating point operations (__addsf3 and __mulsf3) and

integer multiplication (__mulsf3). We also note that a large portion of the

execution time is spent in matmul. This should not come as a surprise;

the Nios II/e has no floating point hardware, and no hardware multiplication.

Therefore, it has to emulate these operations using software instructions.

Each sample counts as 0.001 seconds.

% cumulative self self total

time seconds seconds calls s/call s/call name

22.49 34.75 34.75 1300200 0.00 0.00 __addsf3

21.69 68.27 33.52 7423319 0.00 0.00 __mulsi3

18.08 96.19 27.93 1296100 0.00 0.00 __mulsf3

13.47 117.00 20.81 300 0.07 0.37 matmul

6.92 127.70 10.70 alt_get_errno

2.61 131.73 4.03 87201 0.00 0.00 altera_avalon_jtag_uart_write

2.16 135.06 3.33 51711 0.00 0.00 __muldf3

1.25 136.99 1.93 79400 0.00 0.00 __divsf3

1.00 138.53 1.54 34400 0.00 0.00 __umodsi3

The analysis of top-used functions must be combined with call-graph

analysis. For example, we find that matmul is the fifth most

function in terms of cumulative execution time. This confirms that

the numerical workload of __addsf3, __mulsf3, and __mulsf3 comes

from matmul.

20.81 90.87 300/300 forwardPass [4]

[5] 72.3 20.81 90.87 300 matmul [5]

34.64 0.09 1296000/1300200 __addsf3 [6]

27.93 4.81 1296000/1296100 __mulsf3 [8]

23.41 0.00 5184000/7423319 __mulsi3 [7]

0.00 0.00 900/1100 subtensorSize [89]

It is useful to evaluate how each profiled function contributes to the workload. For example, consider altera_avalon_jtag_uart_write and __muldf3 which hold the sixth and seventh position in the most-often used functions. Clearly, the UART function does not perform a communication but rather input/output, and therefore this function should not be targeted for hardware acceleration.

__muldf3 is an interesting case, as well. From the call graph, we can see that the function is invoked by _dtoa_r, which does not perform a computation. Instead, _dotoa_r appears to be part of printf formatting.

0.07 0.08 1100/51711 _dtoa_r [52]

3.26 3.66 50611/51711 __ieee754_exp [11]

[18] 4.6 3.33 3.74 51711 __muldf3 [18]

3.74 0.00 827376/7423319 __mulsi3 [7]

0.01 0.25 100/100 ___vfprintf_internal_r <cycle 1> [34]

[52] 0.2 0.01 0.25 100 _dtoa_r [52]

0.07 0.08 1100/51711 __muldf3 [18]

0.01 0.03 100/8500 __divdf3 [24]

0.02 0.00 950/47294 __subdf3 [31]

0.01 0.00 1000/5737 __floatsidf [67]

0.01 0.00 400/19737 __adddf3 [37]

0.00 0.00 100/100 __d2b [93]

0.01 0.00 900/5637 __fixdfsi [79]

0.00 0.00 100/200 _Balloc [88]

0.00 0.00 200/141910 __ltdf2 [32]

0.00 0.00 150/5350 __gedf2 [75]

0.00 0.00 199/199 _Bfree [201]

0.00 0.00 100/300 __nedf2 [199]

Hence, a first-order analysis shows that the bulk of the computations is spend on matrix multiplication, and on emulating hardware multiplication and single-precision floating-point computation.

Remember that the code profile shows the amount of cycle spent on computation (e.g. executed functions), but that the actual cycle cost covers storage access, communication overhead, and computation. Hence, you have to look at the profile numbers while keeping the architecture of the design in mind.

Performance evaluation on the back of the envelope

While optimizing the design, we have to keep track of the level of optimization and the relative amount of ‘headroom’ left for improvement. For example, a resource with a utilization of 1% is grossly underutilized and hence will support aggressive optimization. On the other hand, a resource that is at near-maximum capacity is on the verge of becoming a bottleneck. Increasing its utilization will create a bottleneck.

To get a sense of these utilization factors, you can do back of the envelope calculations. For example, the reference takes 6.52 billion cycles for 100 images, which is 65.2 million cycles per image. From the profiling, we know that the bulk of the cycles are spent on matrix multiplication. The matrix multiplications in this neural network have the following form.

- Layer 1:

(16,784) x (784,1) - Layer 2:

(16,16) x (16,1) - Layer 3:

(10,16) x (16,1)

// Perform the actual matrix multiplication

for (int aRow = 0; aRow < aRows; aRow++) {

for (int bCol = 0; bCol < bCols; bCol++) {

for (int aCol = 0; aCol < aCols; aCol++) {

// C[aRow][bCol] += A[aRow][aCol] * B[aCol][bCol]

*(cPtr + (aRow * bCols) + bCol) +=

*(aPtr + (aRow * aCols) + aCol)

* *(bPtr + (aCol * bCols) + bCol);

}

}

}

Given this computation, we next break this down into memory-read operations,

memory-write operations, and multiply operations. The multiplication

of two matrices with (rows, columns) equal to (A,B) and (B,C) respectively,

leads to 2.A.B.C read operations, A.B.C write operations and A.B.C multiply

operations.

Therefore, each of the layers contributes the following memory accesses and computational loads. The first layer clearly dominates the computational load and memory access load. In addition, the amount of cycles spent for these operations (over 65 million cycles) is enormous. As these operations are uniformly spread in time, we see that there are several thousand cycles between each read/write/multiply. Furthermore, we know from earlier experiments that a read and write does not take more then a few (10) cycles. Thus, the bulk of the cycles is being consumed by floating point computations (including additions and multiplications).

| Layer | Reads | Writes | Multiply |

|---|---|---|---|

| 1 | 12544 | 6272 | 6272 |

| 2 | 256 | 128 | 128 |

| 3 | 160 | 80 | 80 |

| Total | 12960 | 6480 | 6480 |

We conclude as follows. The bulk of cycles is spent on floating point operations. Accelerating those floating point operations will be the key to improving performance. Keep in mind that the time needed to compute a floating point operation, in the current setting, is determined by software and by the CPI of the processor (which is very low for a Nios II/e)

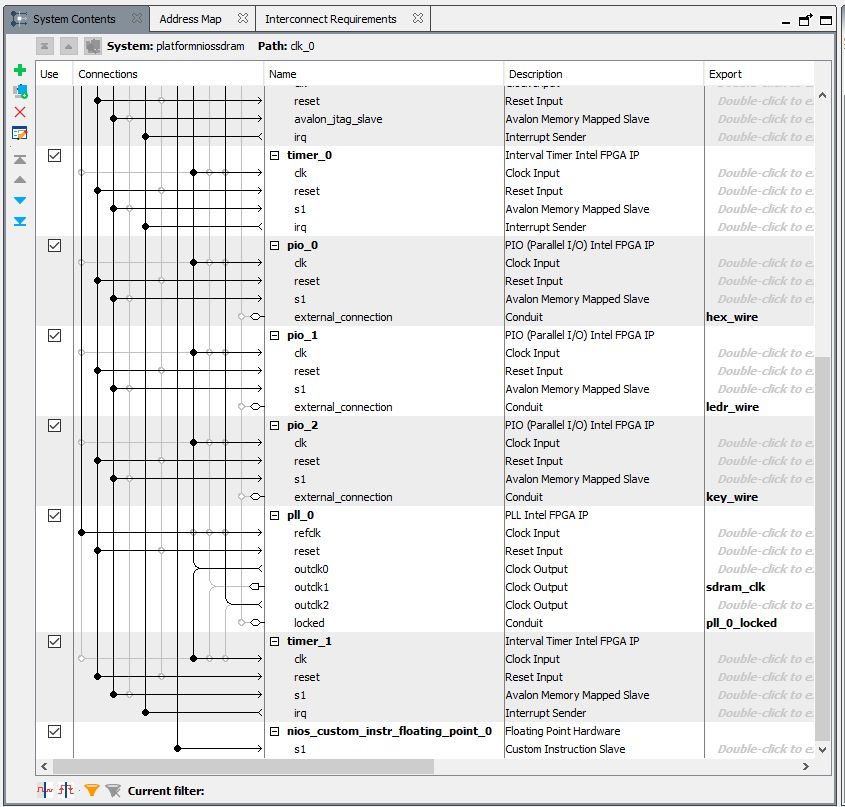

Floating point hardware

Adding floating point hardware to the design can be done in several ways. The fastest, most convenient method is to include a floating point custom instruction to the Nios-II/e. However, a floating-point custom-instruction may not be the ideal final option as the final implementation.

There are other sources of floating-point hardware as well. For our design we need an IEEE 754 compliant adder and multiply. There is a floating-point division, as well, but it is used far less extensively.

After adding the floating-point custom-instruction, you have to re-generate

the board-support package. The custom-instruction is picked up by the BSP generator

and included in the software generation. After recreating the Nios executable,

you’ll find custom instructions in the disassembled code.

$ grep custom main.objdump

4000350: 10c5ffb2 custom 254,r2,r2,r3

4000354: 8085ff32 custom 252,r2,r16,r2

40003e8: 1887ffb2 custom 254,r3,r3,r2

4000424: 2085ffb2 custom 254,r2,r4,r2

4000428: 1885ff32 custom 252,r2,r3,r2

4000430: 1885ff72 custom 253,r2,r3,r2

4000bec: 1885ff32 custom 252,r2,r3,r2

400273c: 1887ffb2 custom 254,r3,r3,r2

4002804: 1885ffb2 custom 254,r2,r3,r2

4002808: 2087ff32 custom 252,r3,r4,r2

...

Gradual improvement saves the day

As you start making changes to the design, remember the golden rule that you always keep a fully functional design available that you can use as a fall-back when things go astray. Here are two development tricks that help you in achieving that goal.

Make a backup of code before making changes

A simple way to achieve this is to create copies of functions as you transform the program. Let’s say that, initially, you have the following outline.

main

+ forwardpass(...)

+ matmul(...)

Before making changes to matmul, first make a copy matmul_accelerate and

then change all functions in the calltree leading up to matmul:

main

+ forwardpass_accelerate(...)

+ matmul_accelerate(...)

If the program breaks, at some point, you can now debug by replacing functions with their original copy, starting from the innermost level of the program.

Run a version of the program locally (X86) before porting it to Nios

Especially in the early stages of development, you’ll find it convenient to create a port of your program to X86 before running it on Nios. The changes over the original testbench are minimal: simply make the condition of Nios-specific code conditional.

For example, the macro NONIOS can be used be remove code that you don’t want

to use on the X86 version:

#ifndef NONIOS

lap = alt_timestamp() - ticks;

if (verbose)

printf("Lap cycles: %lu\n", lap);

#endif

Next, you can create a makefile Makefile.x86 that runs the compilation under the

NONIOS macro.

mnist_infertest.exe: dataset.c functions.c loss_functions.c mnist_infertest.c neuralnet.c optimizer.c tensor.c

gcc -DNONIOS dataset.c functions.c loss_functions.c mnist_infertest.c neuralnet.c optimizer.c tensor.c -o mnist_infertest.exe

clean:

rm -f *.o *.exe

To create a version of the program native, on X86, use make -fMakefile.x86.