Lecture 12 - The On-chip Bus environment (2)

Introduction

In the last lecture, we discussed the basics of SoC and the on-chip bus as the most important interconnect mechanism for SoC. Let us recall the essentials.

- An on-chip bus contains four types of wires: address wires, data wires, command wires, and synchronization wires.

- Every component interface on an on-chip bus is of the master type or the slave type. A master initiates a bus transaction by acquiring the bus from a bus arbiter, broadcasting a bus address and a bus command, and completing a data transfer. A slave responds to a master when the broadcast address matches the slave address.

- A bus is segmented using bridges. A bridge is a hybrid component that acts as a bus slave on one bus segment and as a bus master on the other. Bus bridges, in combination with improved bus transfer protocols, are the key to increasing communication parallelism in the SoC environment.

- ‘Simple’ bus transactions are common for peripheral bus interfaces. In a simple bus transaction, the slave has a single handshake return signal to indicate completion of a transfer (consisting of address decoding followed by data transfer). Such a transaction may stall the bus when the slave is slow to respond.

- When multiple masters request the bus at the same time, the bus arbitration process decides which master will own the bus in the coming transaction. Bus arbitration requires a bus arbiter that resolves competing master requests following a priority resolution protocol.

- There are three kinds of ‘coprocessors’ one commonly finds in an SoC. A memory-mapped coprocessor is a bus slave that is addressed from software using memory load/store instructions. A tightly-coupled coprocessor is connected to the processor over a specialized bus, and it is accessed from software through dedicated instructions. Finally, a custom-instruction coprocessor is fully integrated into the processor and involves modifying the processor architecture to create new ‘instructions’ that access the new hardware.

Today we discuss several more advanced bus mechanisms that deal with the limitations of simple buses. We’ll discuss bus locking, and how that can be used to implement semaphores. We’ll talk about wordlength issues on the bus. We’ll talk about performance-boosting techniques.

We will also discuss two bus systems in more detail: the Avalon bus, used by Platform Designer, and the AMBA AXI bus, used by ARM based systems including the ARM A9 in the Cyclone-V chip on your FPGA board.

Better Buses

We will discuss three improvements in bus systems. The first, bus locking, exclusively allocates bus control to a master over multiple sequential bus transactions. The second, bus transaction splitting, is a performance-enhancing technique for high-speed buses; it is used in combination with bus transaction pipelining to obtain overlapped execution. The third, burst transfers, speeds up the back-to-back transfer of multiple data items.

Bus Locking

Bus locking refers to the ability of a bus master to lock the bus over multiple transfers. A master may do this because of performance constraints, or because its access sequence must be guaranteed to be uninterrupted. Let’s first consider the implementation of the lock concept itself. Bus locking is done at the level of the bus arbiter. After a bus grant, a bus master can assert a lock signal. As long as the lock is in place, the bus arbiter does not grant any additional bus requests (from any master). Thus, the bus lock signal is generated by the bus master and observed by the bus arbiter.

Table: Signals for Bus Arbitration

| Type | Signal | Purpose |
|---|---|---|
| Command | m_reqi | Master i bus request signal, asking for bus access |
| Command | m_granti | Master i bus grant signal, granting bus access to master i |
| Command | m_locki | Master i locks the bus after receiving a bus grant |

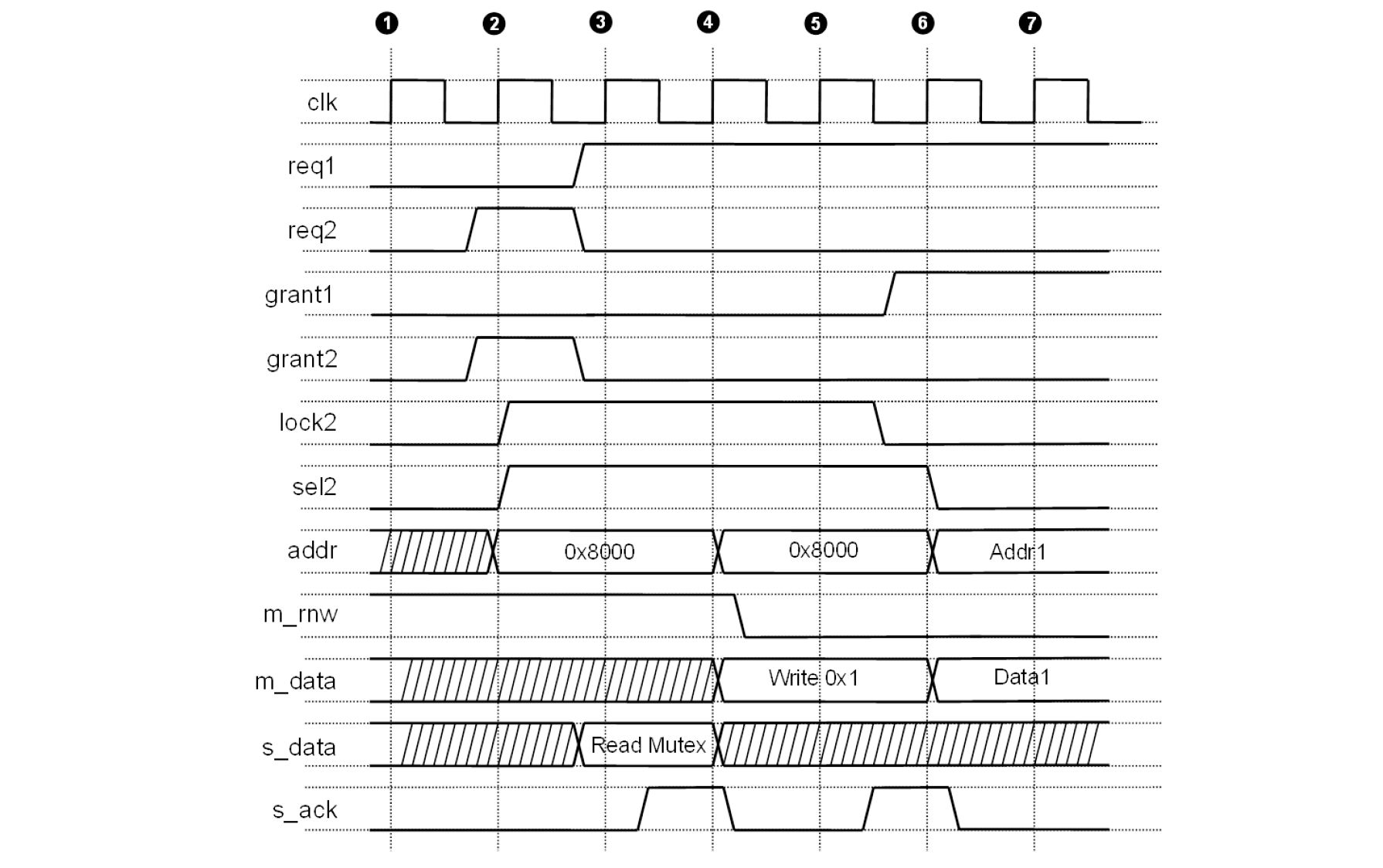

The following figure shows the bus activity during a bus-lock event. Bus master 2 requests and receives a bus grant in cycle 2. When it accesses the bus in cycle 3 (using sel2), the master also locks the bus (using lock2), and it keeps the lock through cycle 5. As a result, the arbiter ignores further bus requests from master 1 (using req1) in cycles 3, 4 and 5.

Using bus locking, master 2 can perform back-to-back operations that are guaranteed not to be interrupted by another bus transaction. In this case, master 2 performs a read operation in cycle 3 (acknowledged in cycle 4), followed by a write operation in cycle 5 (acknowledged in cycle 6). Hence, the bus locking mechanism guarantees that no other master can access the bus between the read and the write of master 2.

Figure: Bus Locking

An application for bus locking is the implementation of semaphores, discussed in an earlier lecture on hardware/software synchronization. A semaphore between two masters that share a memory cannot be implemented using simple bus transfers alone. This becomes clear when we consider the elementary operations on the semaphore, P and V.

P(S): if the semaphore is available, take it; if it is unavailable, wait until it becomes available.

V(S): release the semaphore.

Suppose we implement the semaphore S as a memory-mapped register, accessible from both masters. The following is a trivial (but wrong) implementation of P and V:

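```c
volatile unsigned *S = (volatile unsigned *) 0x8000; // semaphore slave address

void P(void) {
  while (*S != 0)
    ;              // wait until the semaphore is free
  *S = 1;          // take the semaphore
}

void V(void) {
  *S = 0;          // release the semaphore
}
```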

What is the problem with this design? Imagine that two different masters execute P() at the same time. Both masters may read the semaphore (while (*S != 0)) before either of them writes it (*S = 1). If that happens, both masters leave the loop, and both believe they have obtained the semaphore. Obviously, that breaks the semantics of the P operation. The cause is a race condition between the two masters.

Bus locking can prevent this problem. Assume that we have a low-level system call _lock_bus() which locks the bus upon the next master read or write, and keeps it locked until the next _unlock_bus(). With such a primitive, one can write a test-and-set operation (testandset() below), which in turn is useful to build a semaphore. Some processors directly implement an atomic test-and-set instruction for exactly this purpose.

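```c
// Low-level primitives assumed by this example (platform-specific):
// _lock_bus() locks the bus upon the next master read or write,
// _unlock_bus() releases the lock.
extern void _lock_bus(void);
extern void _unlock_bus(void);

volatile unsigned *S = (volatile unsigned *) 0x8000; // semaphore slave address

int testandset(void) {
  int a;
  _lock_bus();     // the read and the write below form one locked sequence
  a = *S;          // read the old semaphore value
  *S = 1;          // mark the semaphore as taken
  _unlock_bus();
  return a;        // 0 means the caller acquired the semaphore
}

void P(void) {
  while (testandset())
    ;              // spin until the old value was 0
}

void V(void) {
  *S = 0;          // release the semaphore
}
```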

Bus Transaction Splitting and Pipelining

In the discussion of bus transfers so far, we limited every slave response to a single acknowledge at the end of the bus transfer. As a result, a bus transaction occupies the bus for multiple clock cycles: from the moment the master acquires the bus until the moment the slave acknowledges the end of the data transfer. We have already seen one example where such a bus transfer protocol slows down the full system. When a bus master communicates through a bridge, the bus segment on the master side of the bridge is stalled until the slave on the other side of the bridge responds.

High-speed buses therefore define an address phase and a data phase for each transaction, and acknowledge each phase separately. The idea is that a slave can quickly decode and acknowledge the address, while accepting or returning the data may take more time. To accomplish this, we add command signals to the bus.

| Type | Signal | Purpose |
|---|---|---|
| Command | m_addr_valid | Master address valid signal (per master) |
| Command | s_addr_ack | Slave address acknowledge (per slave) |
| Command | s_wr_ack | Slave data write acknowledge (per slave) |
| Command | s_rd_ack | Slave data read acknowledge (per slave) |

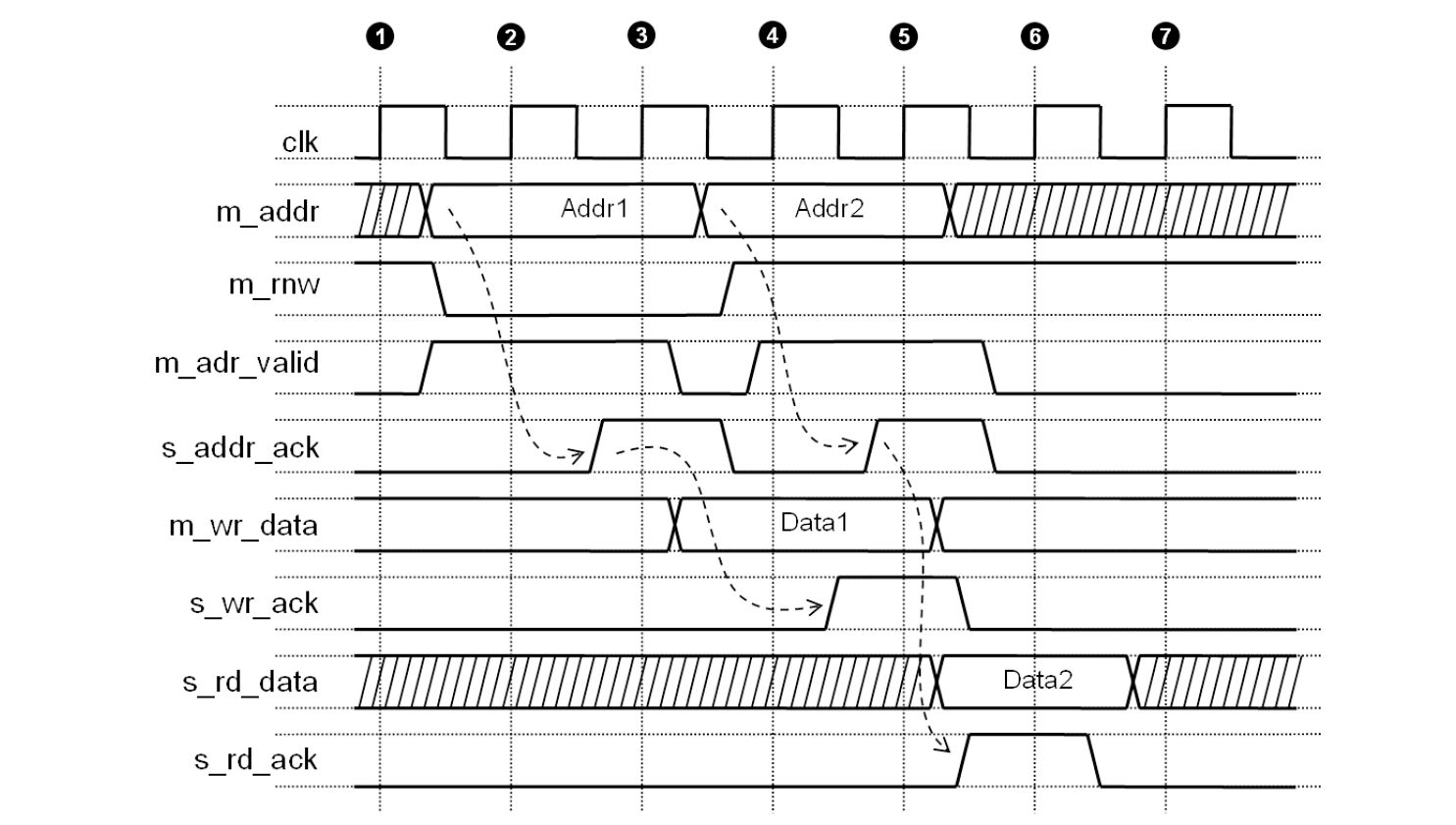

The following figure illustrates bus pipelining in action. The master initiates a transaction in cycle 2. The slave acknowledges this transaction in cycle 3 by asserting the slave address acknowledge. This transaction is marked as a write, and therefore the master knows that the data to be written at address Addr1 can be transmitted as soon as the slave acknowledges the address. Finally, in cycle 5, the slave acknowledges the data being written. Note that this entire bus transaction took 4 clock cycles, from cycle 2 until cycle 5.

However, because of bus pipelining, a second address phase can start as soon as the first address phase has completed (in cycle 3). The master initiates a second transaction, a read from address Addr2, in cycle 4. Again, the slave acknowledges the address, in cycle 5, and returns the data in cycle 6. The second bus transaction spans from cycle 4 to cycle 6. Overall, thanks to bus pipelining, we complete a 4-cycle and a 3-cycle transaction in just five clock cycles (cycles 2 to 6).

In cycles 4 and 5, the two bus transactions overlap. For example, in cycle 4 the address wires carry the address of bus transaction 2, while the data wires carry the data of bus transaction 1. Such overlap is a challenge, in particular when we have to debug the bus system using a logic analyzer.

Figure: Bus Transaction Splitting and Pipelining

Burst Transfers

The third performance-enhancing technique is the burst transfer. In a burst transfer, a single address phase is followed by multiple data transfer phases. The idea is that the data transfers all come from related addresses. A typical example is a cache line fetch from main memory. When a processor experiences a cache miss, an entire cache line is read from memory, which may contain 4 or more consecutive words. This is an ideal case for a burst transfer, since all the words in a cache line come from consecutive addresses.

The following figure illustrates a burst transfer. A burst transfer comes with its own set of command wires that characterize the type of burst, typically including the address increment size. Once a burst transfer has started, the slave completes back-to-back data phases. In the example, the master initiates a burst transfer in cycle 2, and the slave responds in cycle 3. From that point on, the addresses written to the slave are defined by the burst characteristic, which indicates an increment step of 4. Burst transfers can be quite sophisticated in their addressing capability, and they are essential for high-performance SoC.

Figure: Bus Burst Transfer

Bus Transfer Sizing

So far, we have kept silent about the width of a data transfer on a bus. We implicitly assumed that the bus was always as wide as the data items we want to read from or write to the slave. In practice, of course, this is not true. A slave data bus may be narrower or wider than a master data bus. In that case, we have to adapt the data bus widths so that slave data is consistently mapped into the master address space.

To see why bus transfer sizing requires more than wiring the data buses together, consider the following design. A 16-bit slave has four halfword memory-mapped registers at consecutive slave addresses. The 16-bit slave connects to a 32-bit master, which views the address space in words rather than halfwords.

| Slave address | 16-bit slave, slave_D[15:0] |
|---|---|
| 0 | HWORD0 |
| 1 | HWORD1 |
| 2 | HWORD2 |
| 3 | HWORD3 |

| Master address | 32-bit master, master_D[31:16] | master_D[15:0] |
|---|---|---|
| 0 | HWORD1 | HWORD0 |
| 1 | HWORD3 | HWORD2 |

The master address space is filled with consecutive slave halfwords, because that is what a programmer may reasonably expect. For example, HWORD0 to HWORD3 could be locations in a memory, so that the programmer would want to use volatile unsigned short * as the data type to access these locations.

However, this logically sensible mapping rules out simply connecting the slave wires slave_D[15:0] to master_D[15:0]. That wouldn’t work! (Why not?)

The better solution is to insert a multiplexer in front of the slave (for writing the slave), and to zero-extend the slave data to a full word after the slave (for reading the slave).

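- Write path (master to slave): a multiplexer, controlled by address bit adr[1], selects master_D[15:0] (adr[1] = 0) or master_D[31:16] (adr[1] = 1) and drives slave_D[15:0].
- Read path (slave to master): slave_D[15:0] drives master_D[15:0], and master_D[31:16] is driven with 0.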

There is a similar issue when a wide slave (say, 64-bit) is connected to a narrower master (say, 32-bit). Again, the address spaces of the slave and the master have to be matched.

| Slave address | 64-bit slave, slave_D[63:32] | slave_D[31:0] |
|---|---|---|
| 0 | WORD1 | WORD0 |
| 1 | WORD3 | WORD2 |

| Master address | 32-bit master, master_D[31:0] |
|---|---|
| 0 | WORD0 |
| 1 | WORD1 |
| 2 | WORD2 |
| 3 | WORD3 |

In this case, the problem exists at the output of the slave. We cannot simply connect the lower 32 bits of the slave to the master, because that would throw away half of the slave double-word. Instead, we need to multiplex the data down.

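- Write path (master to slave): master_D[31:0] drives slave_D[31:0], and slave_D[63:32] is driven with 0.
- Read path (slave to master): a multiplexer, controlled by address bit adr[2], selects slave_D[31:0] (adr[2] = 0) or slave_D[63:32] (adr[2] = 1) and drives master_D[31:0].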

Examples of standard buses

We now consider two bus systems that we will use for practical designs. The first one, Avalon, is used to build the interconnect in Nios II-based SoCs. The second, AMBA AXI, is used to interconnect ARM processors. The AXI bus is used by the hard-core ARM processor in the Cyclone V FPGA.

Avalon

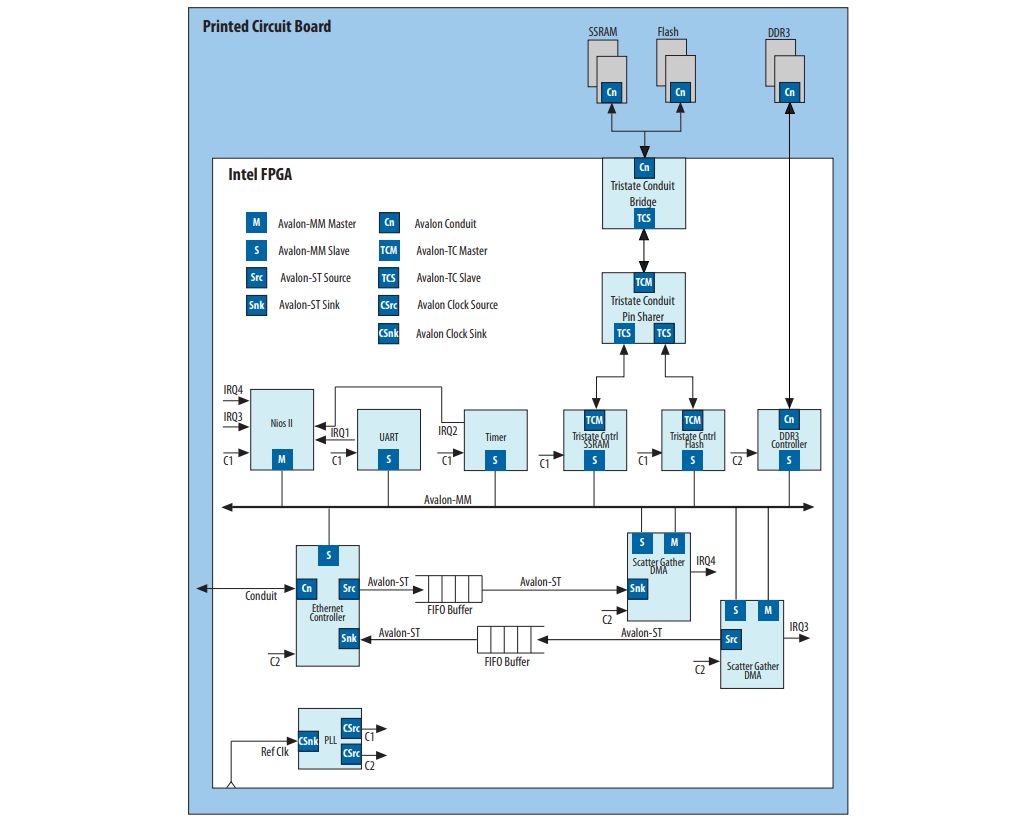

The Avalon bus is specified through a collection of interfaces discussed in the Avalon Interface Specifications manual.

The figure below illustrates an Avalon-based system, taken from that manual. There are, in fact, multiple types of interfaces in Avalon.

- Avalon Memory-Mapped interfaces correspond to the bus interface we discussed so far. This is the general bus interconnect mechanism. We distinguish Avalon Memory-Mapped Master interfaces and Avalon Memory-Mapped Slave interfaces.

- Avalon Streaming interfaces are point-to-point interfaces, intended for high-throughput dedicated connections. We distinguish Avalon Streaming Source interfaces from Avalon Streaming Sink interfaces.

- Avalon Conduit interfaces are needed to build connections from an Avalon-based platform to off-chip and off-platform components. Conduits are the Avalon-platform equivalent of pins on an FPGA chip.

- Avalon Clock and Reset interfaces, and Avalon Interrupt interfaces, are special-purpose interfaces. Clock and Reset interfaces distribute clock and reset signals in a platform; Interrupt interfaces wire up hardware interrupts to the Nios II.

Figure: Overview of the Avalon Bus

All of these interfaces are highly configurable, and the interconnect is generated automatically by Platform Designer. The synthesis is sophisticated and automatically handles most of the difficulties (such as arbiter selection and bus transfer sizing logic). Refer to Platform Designer Interconnect in the Intel Quartus manuals. In this lecture, we will only cover the Avalon Memory-Mapped interface.

An interesting feature of the Avalon-MM bus is how it handles bus arbitration. Rather than doing central arbitration for the overall bus, the arbitration is resolved per slave. That means that multiple masters can issue bus requests simultaneously and, if these requests go to different slaves, the transfers proceed simultaneously.

For this reason, if you consult more recent documentation on Avalon, you will notice that Avalon is described as an ‘interconnect fabric’ rather than a bus. Indeed, the idea of a central address/data bus is logically correct, but it does not correspond to the physical realization of this bus.

Figure: Interconnection fabric for an Avalon MM interface

We discuss a few examples of bus transfers for an Avalon Memory-Mapped interface. The Avalon Memory-Mapped interface is highly configurable and supports all of the features we discussed above. However, these features are optional, and a slave (such as a memory-mapped coprocessor) can always opt not to support them.

| Signal | Width (bits) | Purpose | Used for |
|---|---|---|---|
| address | 1-64 | Byte address | Simple Transfer |
| byteenable | 2, 4, 8, 16, 32, 64, 128 | Specifies byte-select for partial word writes | Simple Transfer |
| read | 1 | Command wire indicating a read operation | Simple Transfer |
| readdata | 8, 16, 32, 64, 128, 256, 512, 1024 | Data bus from slave to master | Simple Transfer |
| write | 1 | Command wire indicating a write operation | Simple Transfer |
| writedata | 8, 16, 32, 64, 128, 256, 512, 1024 | Data bus from master to slave | Simple Transfer |
| waitrequest | 1 | Slave requesting a wait state | Simple Transfer |
| lock | 1 | Master output, bus lock request | Bus Locking |
| readdatavalid | 1 | Slave indicating read data phase completion | Transaction Splitting |
| writeresponsevalid | 1 | Slave indicating write data phase completion | Transaction Splitting |
| burstcount | 1-11 | Master indicating length of burst | Burst Transfer |
| beginbursttransfer | 1 | Asserted during first cycle of a burst | Burst Transfer |

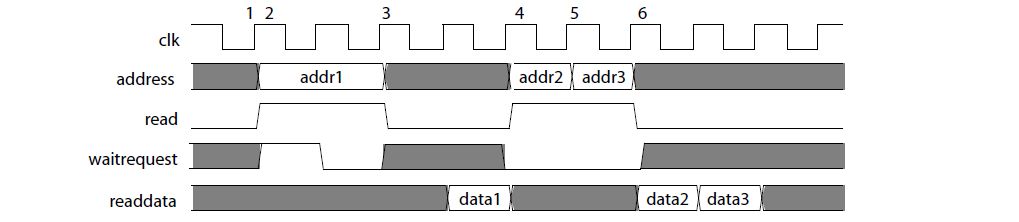

The simplest transfer is a read or write with a fixed number of wait states. In that case, the master asserts the command wires for a preset number of clock cycles. The following figure illustrates a read transaction with 1 wait state followed by a write with 2 wait states. Note that no slave acknowledge signal is needed, as the length of a slave transfer is known in advance. The fixed number of wait states is a property that has to be synthesized into the implementation. It is specified as part of the slave interface (we will discuss later how to design and specify custom slave interfaces using Platform Designer).

Figure: Fixed-length transaction on Avalon: 2-cycle read and a 3-cycle write

For high-speed slave interfaces, transfers can be pipelined. The following is an example of a pipelined read transfer with fixed read latency. After the master address is accepted, the slave returns data exactly two clock cycles later. As with the fixed-length transaction, the fixed read latency has to be specified as an interface property during synthesis.

Figure: Pipelined read transfer with 2-cycle fixed read latency

AMBA AXI

One of the most widely used bus standards is AMBA (Advanced Microcontroller Bus Architecture). As with Avalon, it comes in multiple flavors, covering high-speed interconnect, low-speed peripheral buses, and streaming interfaces. The AMBA standard is defined by ARM and is currently in its fifth generation. The AXI bus used in the Cyclone V chip is AXI3, the version of AXI introduced with the third generation of AMBA.

The AXI bus supports all advanced features we have discussed so far: separate address and data phases, burst control, and pipelined transfers with variable latency. In addition, the AXI standard can handle out-of-order responses from slaves. Most recently, the AXI standard was extended with coherency features. All of these optimizations have a similar goal: to increase performance and concurrency in the system-on-chip.