Lecture 9 - Hardware/Software Synchronization

Introduction

Imagine the following experiment: you’re writing, in software, a loop that sends a series of data items to a hardware coprocessor. You’re using a memory-mapped register to talk to the hardware, so you would write the following loop.

    unsigned items[NUM_ITEMS];

    #define MEMMAPPED_REG (*(volatile unsigned *) HW_ADDRESS)

    void senddata(void) {
        // each store overwrites the previous value held in the register
        for (unsigned i = 0; i < NUM_ITEMS; i++)
            MEMMAPPED_REG = items[i];
    }

This raises the following question. If the hardware coprocessor can observe only the output of the memory-mapped register, how does the coprocessor know that the next value of the items[i] array has arrived? There is, in fact, no way of knowing by looking only at the output of the memory-mapped register. Looking for changes in the value of the memory-mapped register is not enough: items[i] could contain repeated values.
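The limitation can be made concrete with a small sketch. It assumes a hypothetical observer, `count_by_change`, that sees the sequence of values appearing on the register output and counts an arrival whenever the value differs from the previous one. The function names and the sample data are illustrative only.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical observer: counts an "arrival" whenever the value seen on the
 * register output differs from the previously seen value. */
size_t count_by_change(const unsigned *seen, size_t n)
{
    size_t arrivals = 0;
    for (size_t i = 0; i < n; i++) {
        /* the very first value always counts as an arrival */
        if (i == 0 || seen[i] != seen[i - 1])
            arrivals++;
    }
    return arrivals;
}

/* Sends six items, some of them repeated, and returns how many of them
 * the change-based observer failed to notice. */
size_t demo_missed_items(void)
{
    const unsigned items[] = {5, 5, 7, 7, 7, 9};
    size_t sent = sizeof items / sizeof items[0];
    size_t detected = count_by_change(items, sent);
    assert(detected <= sent);
    return sent - detected;
}
```

With this data the observer notices only the transitions to 5, 7 and 9, so three of the six items go undetected. Some extra signalling is needed to mark every transfer, including the repeated ones.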

To solve this problem in a generic manner, we first have to solve an underlying problem called synchronization. Synchronization, in this context, relates to the ability to exchange messages in an orderly manner. Since a hardware coprocessor operates fully in parallel with the software, one has to make sure that the hardware picks up the right sequence of data items.

In embedded systems design, both hardware and software, synchronization is used extensively. Synchronization is needed whenever two parallel entities need to communicate with one another across a shared memory. The shared memory could have many different embodiments: a register, a packet buffer, a RAM, and so on. Likewise, the entities could be hardware or software.

Synchronization solves the following two problems.

- First, synchronization will make sure that the shared memory is not read before it is written.

- Second, synchronization will make sure that the previous result is not overwritten with a new result before the previous result is read.

Because synchronization is such a broad concept, we will discuss it in the context of software as well as hardware. We will describe the semaphore as a generic software solution to synchronize concurrent threads of software. We will also discuss handshake signalling as a generic hardware solution for synchronization.

Synchronization Point

Figure: Synchronization Point

First, we define a generic concept called a synchronization point. Assume you have parallel entities A and B, and both of them are spinning in an infinite loop. A and B could be hardware or software; it does not matter at this point. Also, the circles do not represent time, but rather the progress that A or B has made in its iteration loop. A and B can run at very different speeds, so that A completes a loop much faster than B, or vice versa.

A synchronization point links a specific execution point of A with a specific execution point of B. In the figure, the symbols Sa and Sb mark these execution points in A and B, respectively. The meaning of the synchronization point is this. When A arrives at Sa, and B has not yet arrived at Sb, then A will wait until B has reached Sb. Then, both A and B will transition simultaneously through the synchronization point. That is, A will pass Sa at exactly the same time as B passes Sb. The synchronization point thus ensures that the executions of A and B are linked, but only at a specific point during their iterated execution.

The synchronization point is bidirectional. If B arrives at Sb before A has arrived at Sa, then B will wait until A has reached Sa. Then both A and B will transition simultaneously through the synchronization point, and after that both of them will continue at their own speed.

It’s clear that, by linking A and B in this manner, both of the iteration loops in A and B will run in lockstep. A is not able to get ahead of B, nor is B able to get ahead of A. This is true regardless of the execution speed of A and B, and regardless of the implementation of A and B (hardware or software).

We will next discuss several implementations of the synchronization point, in different embodiments.

Semaphore

A well-known software primitive to implement synchronization points is the semaphore. This is a software primitive that was proposed in 1962 by a famous computer scientist, Edsger Dijkstra. He was one of the first, if not the first, people to think about concurrent software.

The theory of semaphores is broad and deep, and for our purpose we will only discuss the simplest case, namely the binary semaphore. A binary semaphore can be in two possible states: taken or not taken. Dijkstra defined two primitive operations on a semaphore: P(S) and V(S). In this notation, S stands for a semaphore and P and V are two functions that operate on the semaphore. The meaning of P and V is as follows.

- If the semaphore is not taken, then P(S) will take it and continue. On the other hand, if the semaphore is taken, then P(S) will wait until it becomes available.

- V(S) will mark the semaphore as not taken.
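As an illustration, the two operations can be sketched in C11 with an `atomic_flag`. This is a minimal sketch under stated assumptions, not a production semaphore: the names `bsem_t`, `bsem_P`, `bsem_V` and `bsem_try_P` are hypothetical, and P(S) waits by spinning, whereas an operating system would normally suspend the waiting thread.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stdbool.h>

/* Binary semaphore: flag set = taken, flag clear = not taken. */
typedef struct { atomic_flag taken; } bsem_t;

/* Put the semaphore in the "not taken" state. */
void bsem_init(bsem_t *s) { atomic_flag_clear(&s->taken); }

/* Non-blocking attempt: returns true if the caller took the semaphore. */
bool bsem_try_P(bsem_t *s)
{
    /* test_and_set returns the previous state: false means it was free */
    return !atomic_flag_test_and_set(&s->taken);
}

/* P(S): wait (here: spin) until the semaphore can be taken, then take it. */
void bsem_P(bsem_t *s)
{
    while (!bsem_try_P(s))
        ;  /* busy-wait; an OS would block the thread instead */
}

/* V(S): mark the semaphore as not taken. */
void bsem_V(bsem_t *s) { atomic_flag_clear(&s->taken); }

/* Single-threaded walk through the state changes; returns 0 on success. */
int bsem_demo(void)
{
    bsem_t s;
    bsem_init(&s);
    bsem_P(&s);                       /* free -> taken, returns at once   */
    bool second = bsem_try_P(&s);     /* already taken: P would wait here */
    bsem_V(&s);                       /* taken -> free                    */
    bool third = bsem_try_P(&s);      /* can be taken again               */
    assert(!second && third);
    return (!second && third) ? 0 : 1;
}
```

The atomic test-and-set is what makes P(S) safe when two threads call it at the same moment: only one of them observes the flag as previously clear.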

We can now use P(S) and V(S) to build a synchronization point between concurrent software threads. In the following, it is assumed that two software threads are both able to observe a shared memory that holds a data item shared_data, as well as a semaphore S. Also, the programming examples use a hypothetical C-like programming language and are intended only for illustration.

Single-semaphore synchronization point

Here is a synchronization point with a single semaphore.

Initially, entity(one) immediately takes the semaphore, while entity(two) waits a short while. This guarantees that entity(one) takes ownership of the semaphore. After that, entity(one) releases the semaphore only after writing shared_data. entity(two), on the other hand, tries to take the semaphore before reading from shared_data. Since entity(one) has initial ownership of the semaphore, entity(two) will proceed only after entity(one) has updated shared_data.

    int shared_data;
    semaphore S1;                        // only one semaphore is used here

    entity(one) {
        P(S1);                           // take initial ownership of S1
        while (1) {
            short_delay();
            shared_data = ...;
            V(S1);                       // -- sync 1
        }
    }

    entity(two) {
        short_delay();                   // let entity(one) take S1 first
        while (1) {
            P(S1);                       // -- sync 2
            received_data = shared_data;
        }
    }

The program has a caveat, though. entity(one) never waits before it updates shared_data. Instead, entity(one) assumes that entity(two) will always be fast enough to read shared_data right after entity(one) returns the semaphore with V(S1), before the next update. Therefore, this synchronization point only works when entity(one) is slower than entity(two): entity(two) must always arrive first at the synchronization point. Hence, a single semaphore is not sufficient to implement a full synchronization point with bidirectional synchronization capability.

Dual-semaphore synchronization point

Here is an improved solution with two semaphores. You can verify, in fact, that this solution consists of two symmetric implementations of a single-semaphore synchronization point.

In this implementation, after entity(one) has written to shared_data and signalled entity(two) by calling V(S1), entity(one) will wait for entity(two) to complete the reading of shared_data by calling P(S2) on a second semaphore. These two semaphores S1 and S2 will keep both threads entity(one) and entity(two) in lockstep regardless of their execution speed.

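    int shared_data;
    semaphore S1, S2;

    entity(one) {
        P(S1);                           // take S1 before entity(two) first reaches P(S1)
        while (1) {
            variable_delay();
            shared_data = ...;
            V(S1);                       // -- sync 1: data is ready
            P(S2);                       // -- sync 2: wait until data was read
        }
    }

    entity(two) {
        P(S2);                           // take S2 before entity(one) first reaches P(S2)
        while (1) {
            variable_delay();
            P(S1);                       // -- sync 1: wait until data is ready
            received_data = shared_data;
            V(S2);                       // -- sync 2: data was read
        }
    }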
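The difference between the single-semaphore and the dual-semaphore scheme can be checked with a small single-threaded simulation. This is only a sketch under stated assumptions: the function `run_lockstep` and its scheduling string are hypothetical, each entity is modelled as a tiny state machine, the variable delays are modelled by how often an entity gets a turn, and both semaphores are assumed to be taken already when the loops start (that is, the initial P() calls have completed).

```c
#include <assert.h>
#include <stddef.h>

/* Binary semaphore model: 1 = taken, 0 = not taken. */
static int try_P(int *s) { if (*s) return 0; *s = 1; return 1; }
static void V(int *s) { *s = 0; }

/* Runs both loops for `steps` scheduler ticks. `schedule` is a non-empty
 * string of '1' and '2' naming which entity gets each tick (it repeats).
 * With two_way == 0, entity one skips P(S2), as in the single-semaphore
 * version. Returns the number of items received in order, or -1 as soon
 * as entity two reads a value that is not the next item in sequence. */
int run_lockstep(const char *schedule, int steps, int two_way)
{
    int S1 = 1, S2 = 1;          /* initial P(S1), P(S2) already done */
    int shared_data = -1;
    int pc1 = 0, pc2 = 0;        /* position of each entity in its loop */
    int next = 0, received = 0;
    size_t pos = 0;

    assert(schedule && schedule[0]);
    for (int t = 0; t < steps; t++) {
        char who = schedule[pos];
        pos = schedule[pos + 1] ? pos + 1 : 0;
        if (who == '1') {
            switch (pc1) {
            case 0: shared_data = next++; pc1 = 1; break;        /* write  */
            case 1: V(&S1); pc1 = 2; break;                      /* sync 1 */
            case 2: if (!two_way || try_P(&S2)) pc1 = 0; break;  /* sync 2 */
            }
        } else {
            switch (pc2) {
            case 0: if (try_P(&S1)) pc2 = 1; break;              /* sync 1 */
            case 1:                                              /* read   */
                if (shared_data != received) return -1;
                received++; pc2 = 2; break;
            case 2: V(&S2); pc2 = 0; break;                      /* sync 2 */
            }
        }
    }
    return received;
}
```

For example, `run_lockstep("1112", 400, 1)` lets entity one run three times as often as entity two and still delivers every item in order, while `run_lockstep("1112", 400, 0)` returns -1 because entity one overwrites shared_data before it has been read. With the schedule `"1222"`, where entity two is the faster one, both variants succeed, which matches the caveat stated for the single-semaphore version.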

Handshake

We now define a hardware implementation of a synchronization point. The key difference between hardware and software is that, in hardware, the shared memory is much less sophisticated. We don’t have semaphore primitives that can be called to halt the hardware. We only have input and output wires.

A handshake protocol is a signalling sequence between two concurrent entities with the purpose of achieving synchronization. You can think of a handshake in the real world: it takes two people in a concerted effort to shake hands!

Consider two independent modules (hardware or software) that will synchronize in order to transfer a data item from one module to the other. We distinguish two different roles for each module.

- First, one module will be the initiator of the handshake, while the other will react in response to steps taken by the initiator. We will call the former module a master, and the latter module a slave. The concept of master and slave occurs in many different situations that rely on synchronization, including bus protocols and communication protocols.

- Second, the data will flow from one module to the other. To make the terminology unambiguous, we will look at the direction of the data near the master. If the master sends data to a slave, we will call it a write operation. If the master receives data from the slave, we will call it a read operation.

With this terminology, we can now define two implementations for a handshake: a one-way handshake and a two-way handshake. These two cases have some resemblance to the single-semaphore and dual-semaphore synchronization cases discussed earlier.

In the following, we will assume that the two modules to be synchronized both are implemented in hardware, and that both modules are driven by a common clock. Hence, we focus the discussion on synchronous implementations of the handshake protocol.

One-way handshake

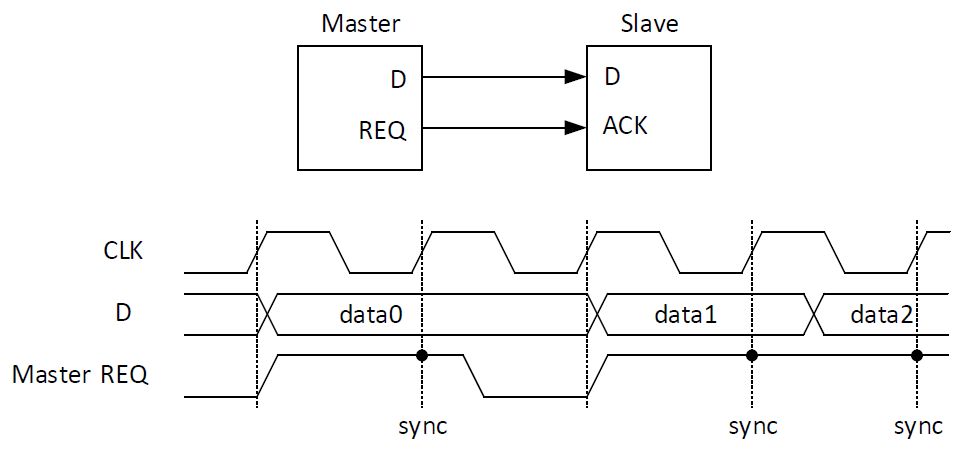

Figure: One-way Handshake

A one-way handshake requires one control wire, called request, from the master module to the slave module. To transfer a data item, the master asserts the control wire. The handshake takes only a single clock cycle to complete, since the slave has to grab or deliver the data at the next clock edge for which request is high. This protocol is reminiscent of the protocol used in the MSP-430 peripheral bus. The following figure illustrates the sequence of operations.

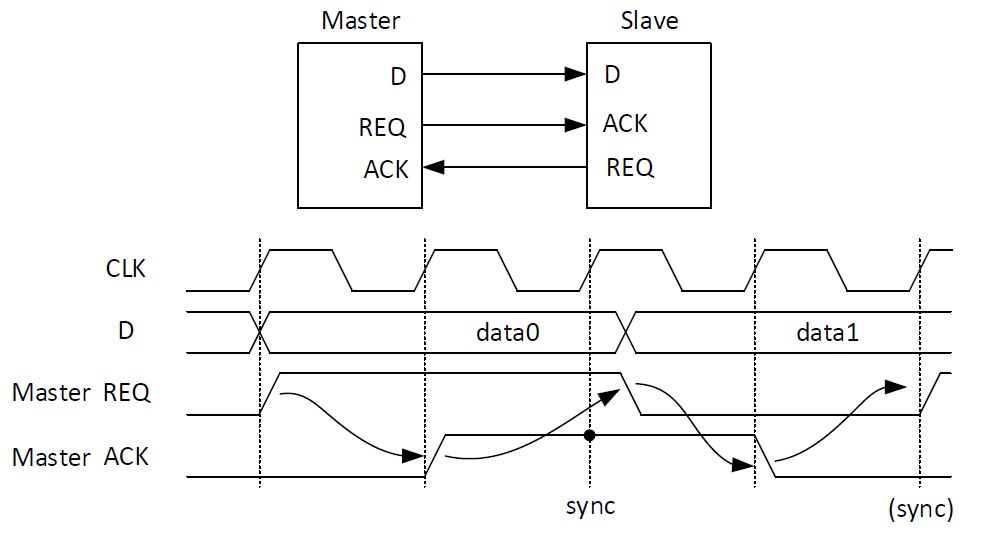

Two-way handshake

Figure: Two-way Handshake

A two-way handshake requires two control wires, one called request and the other called acknowledge. The former wire runs from the master to the slave, while the latter runs from the slave to the master. It is common to reverse the roles for request and acknowledge at the slave side. Thus, the master request is connected to the slave acknowledge, and the slave request is connected to the master acknowledge. A synchronization point is defined as both control wires taking the same value. However, both the master and the slave must follow the rules of the two-way handshake. The slave can assert the master acknowledge only after seeing a master request. The master can de-assert the master request only after receiving the master acknowledge.

We will assume that, in our synchronous implementation, asserting or de-asserting a control wire takes at least one clock cycle. Therefore, it will take at least four clock cycles to run through the entire protocol. During these four clock cycles, we encounter two synchronization points: the first when both request and acknowledge are asserted, and the second when both request and acknowledge are de-asserted.

Handshake Synchronization Primitives

The two-way handshake protocol can be broken down into four smaller components that can be used as building blocks to implement synchronized communications. Imagine two concurrent entities, entity(master) and entity(slave), each of them executing an infinite loop running through the two-way handshake protocol. To make the distinction between master and slave request and acknowledge easy, we make use of four different signals: mREQ and mACK on the master side, and sREQ and sACK on the slave side. mREQ is connected to sACK, while mACK is connected to sREQ. The following is a two-way handshake sequence.

    entity(master) {
        repeat forever {
            mREQ = 1;
            while (mACK == 0) ;
            // SYNC
            mREQ = 0;
            while (mACK == 1) ;
            // SYNC
        }
    }

    entity(slave) {
        repeat forever {
            while (sACK != 1) ;
            sREQ = 1;
            // SYNC
            while (sACK != 0) ;
            sREQ = 0;
            // SYNC
        }
    }

There are thus four different signalling sequences, each of them testing an ACK input and asserting a REQ output. Let’s call these four primitives mSYNC1, mSYNC0, sSYNC1 and sSYNC0. The two-way handshake is now simplified: each mSYNC1 matches up to a sSYNC1, and each mSYNC0 matches up to a sSYNC0. Furthermore, the mSYNC1 needs to alternate with mSYNC0, and the sSYNC1 needs to alternate with sSYNC0. Ignoring an mSYNC0 after an mSYNC1, for example, will break the two-way handshake protocol.

    entity(master) {
        repeat forever {
            mSYNC1;
            // SYNC
            mSYNC0;
            // SYNC
        }
    }

    entity(slave) {
        repeat forever {
            sSYNC1;
            // SYNC
            sSYNC0;
            // SYNC
        }
    }

Communications using Handshakes

A two-way handshake is well suited to implement the communication of a token stream from a master to a slave (write), or from a slave to a master (read). The slave and the master will exchange data through a shared memory. For example, this could be a memory-mapped register, in the case of a hardware-software communications link.

The general rule for synchronized communications is as follows: write into the shared memory before the synchronization point, and read from the shared memory after the synchronization point.

Here is an example where the master writes one data item per protocol iteration to the slave.

    int shared_data;

    entity(master) {
        repeat forever {
            shared_data = ...;           // write before the sync point
            mSYNC1;
            mSYNC0;
        }
    }

    entity(slave) {
        repeat forever {
            sSYNC1;
            received_data = shared_data; // read after the sync point
            sSYNC0;
        }
    }
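The write example above can be exercised with a small tick-based simulation. This is a sketch under stated assumptions rather than a model of any particular hardware: the function `handshake_transfer` is hypothetical, the master and the slave are each stepped once every `m_period` and `s_period` ticks to mimic different speeds, and the item values are arbitrary (with repeats on purpose).

```c
#include <assert.h>
#include <string.h>

enum { N_ITEMS = 5 };

/* Returns 1 if the slave received exactly the items the master sent. */
int handshake_transfer(int m_period, int s_period)
{
    const unsigned items[N_ITEMS] = {3, 3, 3, 8, 8};  /* repeated values */
    unsigned received[N_ITEMS] = {0};
    unsigned shared_data = 0;
    int mREQ = 0, sREQ = 0;      /* wires: sACK sees mREQ, mACK sees sREQ */
    int m_state = 0, s_state = 0;
    int sent = 0, got = 0;

    assert(m_period > 0 && s_period > 0);
    for (long tick = 0; tick < 100000 && got < N_ITEMS; tick++) {
        if (tick % m_period == 0) {             /* master takes a step */
            int mACK = sREQ;
            switch (m_state) {
            case 0:                             /* write, then begin mSYNC1 */
                if (sent < N_ITEMS) {
                    shared_data = items[sent++];
                    mREQ = 1;
                    m_state = 1;
                }
                break;
            case 1:                             /* end mSYNC1, begin mSYNC0 */
                if (mACK == 1) { mREQ = 0; m_state = 2; }
                break;
            case 2:                             /* end mSYNC0 */
                if (mACK == 0) m_state = 0;
                break;
            }
        }
        if (tick % s_period == 0) {             /* slave takes a step */
            int sACK = mREQ;
            switch (s_state) {
            case 0:                             /* sSYNC1 */
                if (sACK == 1) { sREQ = 1; s_state = 1; }
                break;
            case 1:                             /* read after the sync */
                received[got++] = shared_data;
                s_state = 2;
                break;
            case 2:                             /* sSYNC0 */
                if (sACK == 0) { sREQ = 0; s_state = 0; }
                break;
            }
        }
    }
    return got == N_ITEMS && memcmp(items, received, sizeof items) == 0;
}
```

Calling `handshake_transfer(1, 7)` (fast master, slow slave) and `handshake_transfer(7, 1)` (slow master, fast slave) both return 1. The master cannot write the next item until mSYNC0 completes, and the slave only releases sREQ after it has read the current one, so repeated values are transferred just as reliably as distinct ones.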

This can be extended to more complicated sequences. For example, let’s say that the master needs to send two arguments to a slave, and wait for a result computed by the slave. We can implement this with a single shared variable as follows. Thanks to the synchronization primitives, the master and the slave will iterate in lockstep, and the correctness of the result is independent of the time it takes for the master or slave to compute or complete steps in the synchronization protocol.

    int shared_data;

    entity(master) {
        repeat forever {
            shared_data = argument1;
            mSYNC1;                      // -- (1)
            shared_data = argument2;     // caution: must not overtake the slave's read of argument1
            mSYNC0;                      // -- (2)
            mSYNC1;                      // -- (3)
            result = shared_data;
            mSYNC0;                      // -- (4)
        }
    }

    entity(slave) {
        repeat forever {
            sSYNC1;                      // -- (1)
            received_argument1 = shared_data;
            sSYNC0;                      // -- (2)
            received_argument2 = shared_data;
            result = compute(received_argument1, received_argument2);
            shared_data = result;
            sSYNC1;                      // -- (3)
            sSYNC0;                      // -- (4)
        }
    }

Implementing the two-way handshake

The master has an output mREQ and an input mACK. The slave uses an output sREQ and an input sACK. Two synchronization wires connect mREQ to sACK and sREQ to mACK, respectively.

The complete handshake involves four phases.

- The master asserts mREQ and blocks until mACK becomes asserted.

- The slave waits until sACK becomes asserted, and asserts sREQ in response.

- The master de-asserts mREQ and blocks until mACK becomes de-asserted.

- The slave waits until sACK becomes de-asserted, and de-asserts sREQ in response.

During execution of the two-way handshake protocol, the master and the slave synchronize two times: when both mREQ and mACK are asserted, and when both mREQ and mACK are de-asserted.

We can describe the activities of master during the two-way handshake using pseudocode as follows.

    master input  mACK;
    master output mREQ;

    mSYNC1:
        mREQ = 1;
        while (mACK != 1) ;   // wait

    mSYNC0:
        mREQ = 0;
        while (mACK != 0) ;   // wait

Similarly, we can describe the slave activities using pseudocode as follows:

    slave input  sACK;
    slave output sREQ;

    sSYNC1:
        while (sACK != 1) ;   // wait
        sREQ = 1;

    sSYNC0:
        while (sACK != 0) ;   // wait
        sREQ = 0;

To design synchronized communication between the master and the slave, we can use the handshake primitives, keeping in mind that shared storage must be written before the synchronization, and it must be read after the synchronization. Furthermore, xSYNC1 always has to alternate with xSYNC0; for every xSYNC1 you have to add a matching xSYNC0 to maintain a valid two-way handshake protocol.

Example: A hardware slave read by a software master

The synchronization primitives are implementation agnostic; you can map them in hardware using Finite State Machine logic, or in software using C programming. As long as you implement the handshake rules correctly, the resulting system will always complete the two-way handshake.

Consider the following example. A hardware module produces a stream of samples. That stream has to be read by a software module. We assume that there are no real-time constraints, as long as the entire stream of samples is correctly read.

This design is well suited for a two-way handshake protocol. Let’s make the overall design decisions based on the properties we observe.

- Data flows from the hardware to the software. We will use a memory-mapped register D that is written by the hardware and read by the software.

- To implement the synchronization signalling, we use two memory-mapped registers, R and A, each one bit wide, which are placed between the hardware and the software. The input of R is mREQ, while the output is sACK. The input of A is sREQ, while the output is mACK.

The software reads the stream of samples as follows. Note that the software can read in two samples per handshake, one after each synchronization point.

Figure: Synchronization Point Example

    #define D (*(volatile unsigned *) address_of_D_register)
    #define R (*(volatile unsigned *) address_of_R_register)
    #define A (*(volatile unsigned *) address_of_A_register)

    int main(void) {
        unsigned cnt = 0, stream[SIZE];

        while (1) {
            R = 1;                    // mSYNC1: assert request
            while (A != 1) ;          //         wait for acknowledge
            stream[cnt] = D;          // read shared D after sync
            cnt = (cnt + 1) % SIZE;

            R = 0;                    // mSYNC0: de-assert request
            while (A != 0) ;          //         wait for acknowledge to drop
            stream[cnt] = D;          // read shared D after sync
            cnt = (cnt + 1) % SIZE;
        }
    }

The hardware writes the stream of samples as follows. We have abstracted out the memory-decoding logic, and instead assume a hardware module with a D output, an R input and an A output. Also, the values NEWVALUE_V1 and NEWVALUE_V0 represent two samples of the stream. Additional coding will be needed to extend this to a longer sequence.

    module hwstream(input         rst,
                    input         clk,
                    output [31:0] D,
                    input         R,
                    output        A);

      localparam S0 = 0, S1 = 1;

      reg [1:0] state, statenext;

      // state register with synchronous reset (the port is named rst)
      always @(posedge clk)
        state <= (rst) ? S0 : statenext;

      // next-state logic: follow the request input R
      always @(*)
        begin
          statenext = state;
          case (state)
            S0: if ( R) statenext = S1;
            S1: if (~R) statenext = S0;
          endcase
        end

      // A and D are decoded from the state, so they change together
      assign A = (state == S0) ? 1'h0 : 1'h1;
      assign D = (state == S0) ? NEWVALUE_V1 : NEWVALUE_V0;

    endmodule
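To see how the software loop and this state machine interact, the module can be mirrored in a small C model. This is a sketch only: the functions `hw_next`, `hw_A`, `hw_D` and `one_handshake` are hypothetical helpers, one call to `hw_next` stands for one clock edge, and the two sample constants are placeholder values, since the listing above leaves NEWVALUE_V1 and NEWVALUE_V0 undefined.

```c
#include <assert.h>

enum hw_state { S0 = 0, S1 = 1 };

/* Placeholder samples (assumed values, not from the original design). */
#define NEWVALUE_V1 0x11111111u
#define NEWVALUE_V0 0x22222222u

/* One clock edge: mirrors the state register and the case statement. */
enum hw_state hw_next(enum hw_state state, int rst, int R)
{
    if (rst)
        return S0;
    switch (state) {
    case S0: if (R)  return S1; break;
    case S1: if (!R) return S0; break;
    }
    return state;                 /* default: statenext = state */
}

/* Output decoding, mirroring the two assign statements. */
int hw_A(enum hw_state state) { return state == S0 ? 0 : 1; }
unsigned hw_D(enum hw_state state)
{
    return state == S0 ? NEWVALUE_V1 : NEWVALUE_V0;
}

/* The software master loop, run against the model for one full handshake.
 * Stores the two samples in out[] and returns the clock edges consumed. */
int one_handshake(enum hw_state *st, unsigned out[2])
{
    int edges = 0;
    int R;

    assert(st && out);
    R = 1;                                        /* mSYNC1 */
    while (hw_A(*st) != 1) { *st = hw_next(*st, 0, R); edges++; }
    out[0] = hw_D(*st);                           /* read after sync */
    R = 0;                                        /* mSYNC0 */
    while (hw_A(*st) != 0) { *st = hw_next(*st, 0, R); edges++; }
    out[1] = hw_D(*st);                           /* read after sync */
    return edges;
}
```

Starting from the reset state, one handshake consumes two clock edges in this model. Because D is decoded from the same state as A, the sample read after A rises is NEWVALUE_V0 and the sample read after A falls is NEWVALUE_V1.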